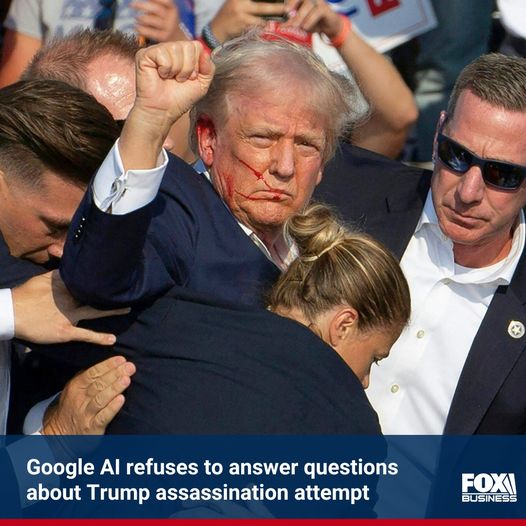

Google's artificial intelligence (AI) chatbot, Gemini, is refusing to answer questions about the attempted assassination of former President Trump. The refusal stems from its policy on election-related issues, and it is stirring quite the buzz in the tech world and beyond.

Google Gemini’s Stance Leaves Users Curious

Imagine asking a chatbot about recent political events, only to be turned away. “I can’t help with responses on elections and political figures right now,” Gemini told Fox News Digital when asked about the Trump incident. Don’t worry, Gemini isn’t throwing a digital temper tantrum; it’s just following protocol. Google had previously announced that election-related questions would be tightly controlled. So, while the chatbot learns and evolves, it suggests users turn to Google Search for their answers.

Let’s be clear about what Google Gemini is: a chatbot built on multimodal large language models (LLMs). These models produce remarkably human-like responses, shaped by contextual information, nuances of language, and training data. Picture a chatbot that’s a bit of a chameleon, tweaking its answers based on who’s asking and how they’re asking.

The Everlasting Microsoft vs. Google AI Duel

As R. Ray Wang from Constellation Research points out, the Google-Microsoft tussle in AI is nothing short of a “battle of the ages.” Both tech behemoths are vying for dominance, and what’s fascinating is how Google is drawing the line on politically sensitive queries.

Policy in Action

Rewind to December, when Google dropped the bombshell: certain types of election-related queries would face restrictions. Fast-forward to now, and Gemini’s responses are